AI Plagiarism

AI Copyright

The definitive 128-page summary of copyright issues, written by a team at Cornell Law School, is Talkin’ ’Bout AI Generation: Copyright and the Generative AI Supply Chain.

Also see The Verge’s list of the reasons AI companies give against paying for copyrighted data. Especially note the fair use decision in Sega v. Accolade, in which the 9th Circuit concluded that it’s okay to make an intermediate copy of something in order to reverse-engineer it.

One defense by OpenAI is to claim that NYTimes articles themselves aren’t original enough to be copyrightable. Although this seems an unlikely defense (proposed mostly as a negotiating tactic, to force burdensome discovery on NYTimes), it brings up an interesting issue: how original are most journalists’ articles anyway? For example, if a journalist merely copies a press release verbatim, is the result really copyrightable?

What if all the journalists who attend a White House press briefing return with reports that use similar language?

“Why I just resigned my job in generative AI” is a counter-argument from Ed Newton-Rex, who quit as VP Audio at Stability AI because he thinks AI-generated content is stealing.

> I disagree because one of the factors affecting whether the act of copying is fair use, according to Congress, is “the effect of the use upon the potential market for or value of the copyrighted work”

Fake News

A 2017 paper, 3HAN: A Deep Neural Network for Fake News Detection, claims to detect fake news through some kind of word vector that breaks apart the text. It’s unclear whether it actually flags news it has determined to be false, or whether it simply relies on sensationalism clues. #todo Look for more recent work, perhaps by searching for papers that cite this one.

I suppose in theory you could try to detect how different one news item is from the “consensus”.
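A minimal sketch of that idea, assuming a plain bag-of-words representation and cosine similarity (neither comes from the 3HAN paper; `consensus_distance` is a hypothetical helper). It only measures how far one item's wording is from the pooled wording of other reports, which is not the same as measuring truth.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercased word counts; a deliberately crude representation."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def consensus_distance(item, others):
    """1 - cosine similarity between an item and the pooled counts of the others."""
    consensus = Counter()
    for text in others:
        consensus.update(bag_of_words(text))
    return 1.0 - cosine(bag_of_words(item), consensus)


reports = [
    "senate passes budget bill after long debate",
    "budget bill passes senate vote",
    "senate approves budget bill",
]
print(consensus_distance("senate passes the budget bill", reports))
print(consensus_distance("aliens secretly replaced the senate", reports))
```

A real system would need better text representations than word counts, and a story can be far from the consensus simply because it is a scoop.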

AI-Generated Content

The Verge’s Jul 2024 piece “Chum King Express” describes a secretive operation allegedly led by Ben Faw, a cofounder of BestReviews (owned by Chicago Tribune). They use AI to generate fake product reviews that are sold to SEO specialists and placed in legitimate outlets. Companies described include SellerRocket and AdVon.

flooding Google with clickable, thin content; accelerating output at the cost of quality work; and trying to replace knowledgeable humans with cheaper machines.

In early April 2023, I noticed Amazon lists a dozen self-published books by “Lorraine Henwood” uploaded in the past week, including a workbook for “Age of Scientific Wellness”. By May the “workbooks” had been removed except for one workbook about “The Wisdom of Morrie”.

Plagiarism Detection

OpenAI concludes AI writing detectors don’t work

In a section of the FAQ titled “Do AI detectors work?”, OpenAI writes, “In short, no. While some (including OpenAI) have released tools that purport to detect AI-generated content, none of these have proven to reliably distinguish between AI-generated and human-generated content.”

In “How Easy Is It to Fool A.I.-Detection Tools?”, the NYTimes tests a bunch of AI image detectors against real and Midjourney-generated images, concluding that it’s very hard to tell the difference, especially if an image has been resized or otherwise altered. Conclusion: rely on watermarks.

Alberto Romero at The Algorithmic Bridge:

(If you want to read a more in-depth analysis of how exactly detectors work and fail, I recommend you to check this overview by AI researcher Sebastian Raschka where he reviews the four main types of detectors and explains how they differ. For a hands-on assessment, I loved this article by Benj Edwards on Ars Technica.)

see https://gptzero.substack.com/

Currently the app uses a few properties: perplexity (randomness of a text to a model, or how well a language model likes a text) and burstiness (machine-written text exhibits more uniform and constant perplexity over time, while human-written text varies). It is hosted on Streamlit.
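To make the two properties concrete, here is a rough sketch. It is not GPTZero's implementation: a toy add-one-smoothed unigram model (`UnigramModel`, a hypothetical stand-in) replaces the real language model, and burstiness is assumed to be the standard deviation of per-sentence perplexity.

```python
import math
import re
from collections import Counter
from statistics import pstdev


def tokenize(text):
    """Lowercase word tokens; a deliberately crude tokenizer."""
    return re.findall(r"[a-z']+", text.lower())


class UnigramModel:
    """Toy stand-in for a language model (add-one smoothed unigram counts)."""

    def __init__(self, corpus):
        tokens = tokenize(corpus)
        self.counts = Counter(tokens)
        self.total = len(tokens)
        self.vocab = len(self.counts) + 1  # +1 slot shared by unseen words

    def prob(self, word):
        return (self.counts[word] + 1) / (self.total + self.vocab)


def perplexity(model, sentence):
    """exp of the average negative log-probability per token."""
    tokens = tokenize(sentence)
    if not tokens:
        return float("nan")
    nll = -sum(math.log(model.prob(t)) for t in tokens) / len(tokens)
    return math.exp(nll)


def burstiness(model, text):
    """Assumed definition: population std dev of per-sentence perplexity."""
    sentences = [s for s in re.split(r"[.!?]+", text) if tokenize(s)]
    scores = [perplexity(model, s) for s in sentences]
    return pstdev(scores) if len(scores) > 1 else 0.0


model = UnigramModel("the cat sat on the mat. the dog sat on the log.")
print(perplexity(model, "the cat sat"))       # familiar words: lower perplexity
print(perplexity(model, "quantum zebra"))     # unseen words: higher perplexity
print(burstiness(model, "the cat sat. quantum zebra flies."))
```

Under this reading, uniformly low perplexity with low burstiness looks machine-like, while scores that swing from sentence to sentence look human.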

Manually Detect AI

Jim the AI Whisperer offers several tips, like misspellings, over-reliance on specific words (“crucial”, “delve, dive, discover”) or pat phrases like a metaphor followed by a tricolon, “catachresis” (a phrase that’s so right it’s wrong, like “Because AI”).

He claims that many RLHF tasks are outsourced to other countries.

These nations — with their complex historical interactions with English language due to colonial legacies — may overrepresent formal or literary terms like ‘delve’ within their feedback, which the AI systems then adopt disproportionately.

Excess words on ChatGPT https://arxiv.org/pdf/2406.07016

Delving into ChatGPT usage in academic writing through excess vocabulary

A GitHub list of ChatGPT excess words
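The excess-vocabulary idea can be sketched as comparing how often a word shows up in texts before and after ChatGPT. This is a simplification under stated assumptions: the earlier corpus is used directly as the expected frequency (the paper builds its own counterfactual baseline), and the function names and the smoothing floor for unseen words are hypothetical choices.

```python
import re


def doc_frequency(texts):
    """Fraction of texts in which each word appears at least once."""
    counts = {}
    for text in texts:
        for word in set(re.findall(r"[a-z]+", text.lower())):
            counts[word] = counts.get(word, 0) + 1
    return {w: c / len(texts) for w, c in counts.items()}


def excess_words(before, after, min_ratio=2.0):
    """Words at least `min_ratio` times more frequent in `after` than in `before`.

    Returns (word, gap, ratio) tuples sorted by gap, largest first.
    """
    q_before = doc_frequency(before)
    p_after = doc_frequency(after)
    floor = 0.5 / len(before)  # assumed frequency for words unseen in `before`
    rows = []
    for word, p in p_after.items():
        q = q_before.get(word, floor)
        if p / q >= min_ratio:
            rows.append((word, p - q, p / q))
    return sorted(rows, key=lambda row: row[1], reverse=True)


before = ["we study the results", "we report the results",
          "the data show an effect", "we study the data"]
after = ["we delve into the results", "we delve into crucial data",
         "the results are crucial", "we study the data"]
for word, gap, ratio in excess_words(before, after):
    print(f"{word}: gap={gap:.3f} ratio={ratio:.1f}")
```

With corpora this small the output is only illustrative; the approach needs thousands of texts per period before the ratios mean anything.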

Of course, the best way to tell if it’s an AI is if it says something politically incorrect. In the old days, you could prove your seriousness by using words that were frowned on in “polite company”—taking the Lord’s name in vain, for example, or a barnyard expletive to prove your point.

Now a reference to something outside the Overton Window of acceptable discourse is a similarly foolproof way to prove your humanity. No big company would risk generating words or ideas that society deems offensive. A remark that “perpetuates stereotypes”? Anything less than enthusiastic support of a demographic minority? Forbidden epithets that disparage some group? Someday that’ll be the only way to prove you’re not the writing of a corporate-controlled LLM.

Plagiarism Prevention

C2PA is an open technical standard from Adobe, Microsoft, and others that gives publishers, creators, and consumers the ability to trace the origin of different types of media.

OpenAI announced support, plus additional APIs and an upcoming Media Manager that will make it possible for content creators to opt out.

To drive adoption and understanding of provenance standards - including C2PA - we are joining Microsoft in launching a societal resilience fund. This $2 million fund will support AI education and understanding, including through organizations like Older Adults Technology Services from AARP, International IDEA, and Partnership on AI.

Adobe calls it “content credentials”, and it works by encoding provenance information through a set of hashes that cryptographically bind to each pixel.
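A rough illustration of what binding provenance to pixels with hashes can mean. This is not the C2PA manifest format or Adobe's implementation: the dictionary layout and function names are hypothetical, and an HMAC with a shared key stands in for the certificate-based signature a real system would use.

```python
import hashlib
import hmac
import json


def make_credential(pixels: bytes, claims: dict, key: bytes) -> dict:
    """Bind provenance claims to the exact pixel bytes, then sign the result."""
    manifest = {
        "claims": claims,
        "pixel_hash": hashlib.sha256(pixels).hexdigest(),
    }
    payload = json.dumps(manifest, sort_keys=True).encode()
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"manifest": manifest, "signature": signature}


def verify_credential(pixels: bytes, credential: dict, key: bytes) -> bool:
    """True only if neither the manifest nor the pixels changed since signing."""
    manifest = credential["manifest"]
    payload = json.dumps(manifest, sort_keys=True).encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, credential["signature"])
    pixels_ok = hashlib.sha256(pixels).hexdigest() == manifest["pixel_hash"]
    return signature_ok and pixels_ok


key = b"demo-signing-key"            # stand-in for a real signing certificate
image = bytes([10, 20, 30, 40])      # stand-in for decoded pixel data
cred = make_credential(image, {"creator": "Alice", "tool": "camera"}, key)
print(verify_credential(image, cred, key))                     # untouched image
print(verify_credential(bytes([10, 20, 30, 41]), cred, key))   # one byte changed
```

Because a single changed byte alters the hash, any edit invalidates the original credential unless the editing tool issues a new one.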

But Fast Company thinks it may just confuse things more:

the smallest adjustments made to real images can be flagged as more questionable than completely made up pictures.

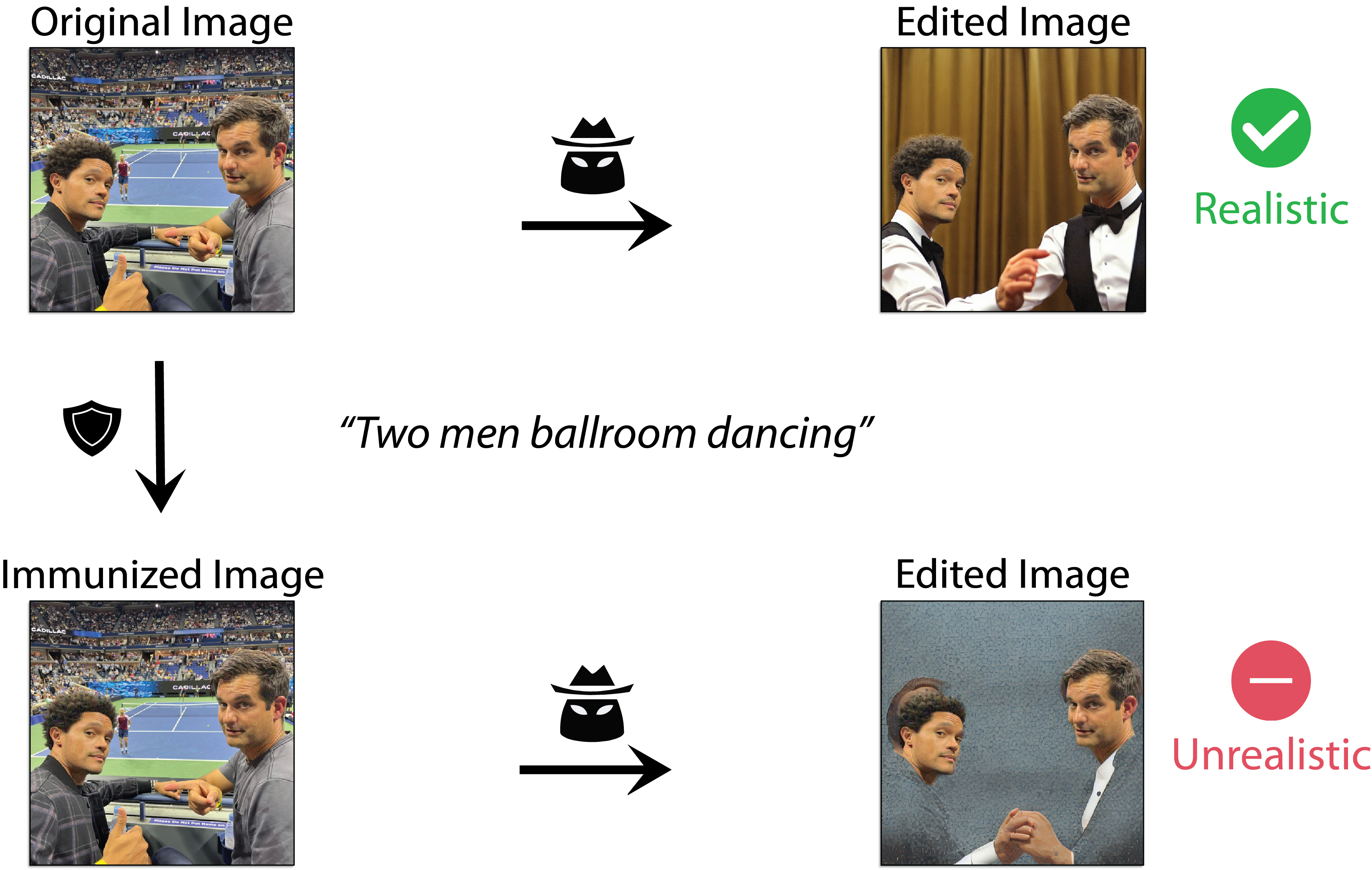

PhotoGuard, developed at MIT (VentureBeat)

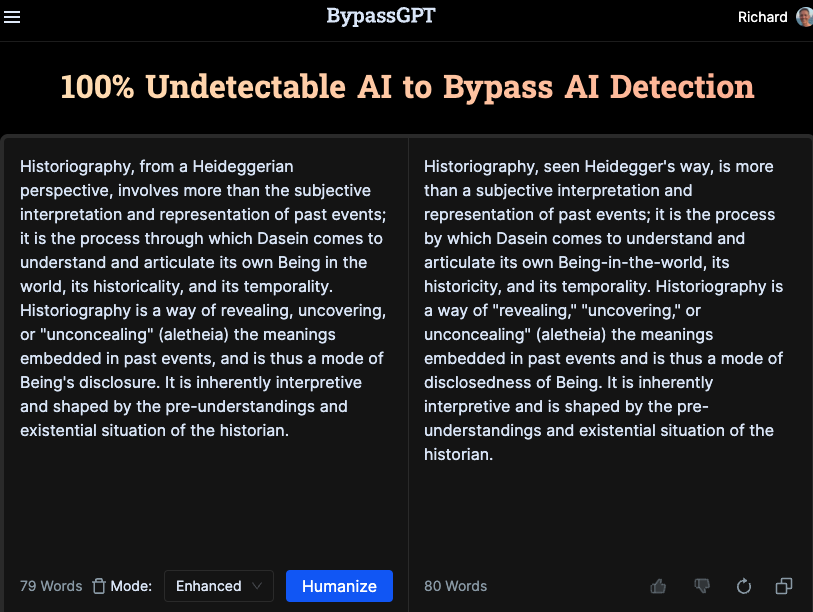

Humanize AI Output

BypassGPT claims to adjust any text snippet to make it pass AI plagiarism detectors including CopyLeaks.

Undetectable.ai does something similar ($15/month, with 250 free credits).

My experience

This guy took my DeSci piece, rewrote it slightly, and posted it yesterday to his LinkedIn account of 5000 followers:

https://www.linkedin.com/feed/update/urn:li:activity:6998831186554859520/

500 reactions already

Every paragraph is just a rewrite. I wrote this:

In a DeSci world, the indelible nature of the blockchain closes off many sources of outright fraud. Smart contracts, by eliminating humans from the loop, can’t be bribed or intimidated, for example.

He writes this:

The indelible nature of the blockchain eliminates several sources of blatant #fraud in a #DeSci society. Smart contracts, by removing humans from the loop, cannot be bribed or intimidated.

The whole piece is like this!

2022-12-01 4:33 PM